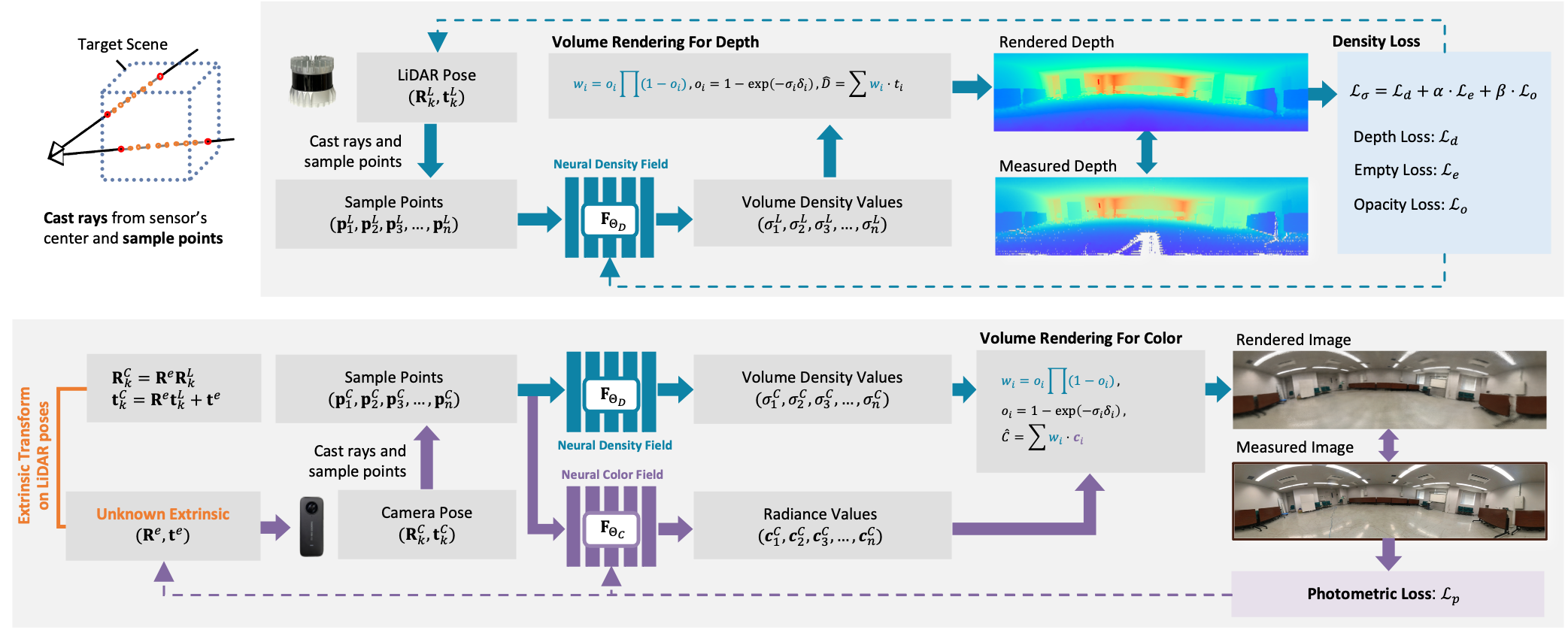

Neural Density Field

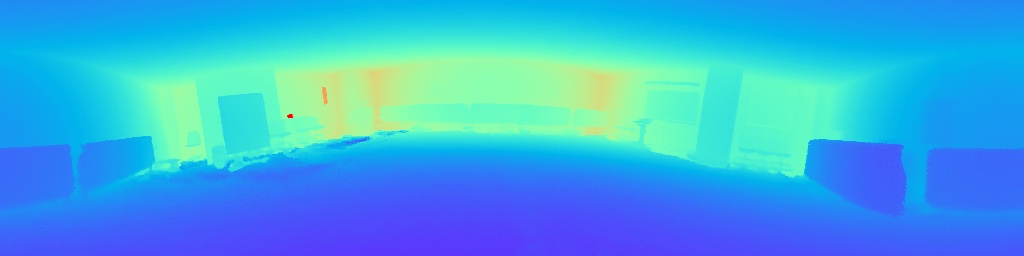

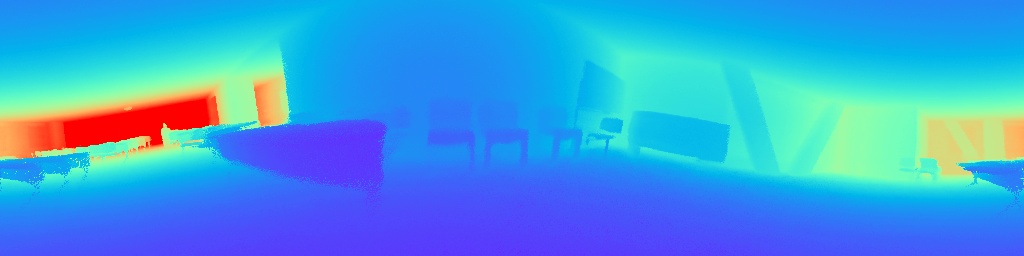

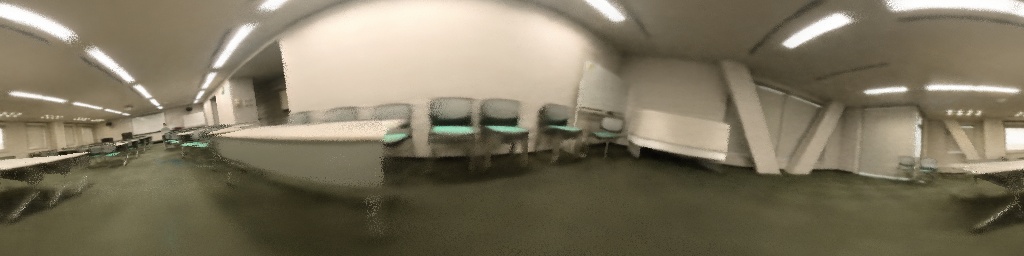

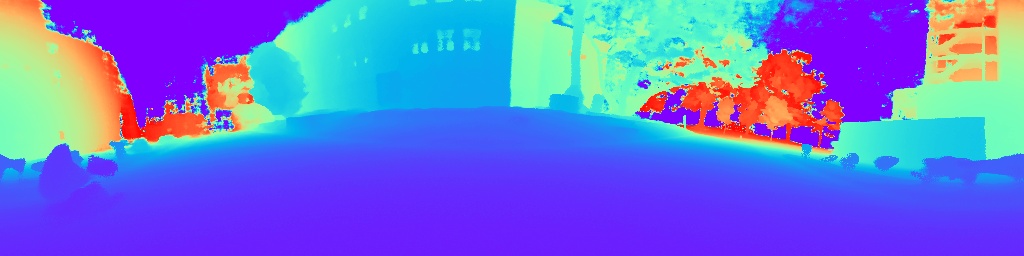

We train a neural density field on LiDAR measurements so that it represents the geometry of the scene. Given points sampled along a LiDAR ray, the field outputs their densities, and volume rendering integrates these densities to compute the depth of that ray. The trained neural density field can also be used to estimate LiDAR poses sequentially.
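The depth computation described above can be sketched as follows. This is a minimal NumPy sketch assuming standard NeRF-style quadrature (alpha compositing with transmittance), not the authors' implementation. `toy_density` is a hypothetical stand-in for the learned field (a wall at x ≥ 4.95 m). `render_depth`, `depth_loss`, the sampling range, and the L1 loss form are illustrative assumptions.

```python
import numpy as np

def toy_density(points):
    """Hypothetical stand-in for the learned field: a solid wall occupying x >= 4.95 m."""
    return np.where(points[:, 0] >= 4.95, 1e3, 0.0)

def render_depth(density_fn, origin, direction, near=0.0, far=10.0, n_samples=101):
    """Volume-render the expected depth of one LiDAR ray (assumed NeRF-style quadrature)."""
    t = np.linspace(near, far, n_samples)                  # sample distances along the ray
    points = origin[None, :] + t[:, None] * direction[None, :]
    sigma = density_fn(points)                             # densities at the sampled points
    delta = np.append(np.diff(t), 1e10)                    # interval lengths; last one ~infinite
    alpha = 1.0 - np.exp(-sigma * delta)                   # termination probability per interval
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1]))   # transmittance T_i up to sample i
    weights = trans * alpha                                # contribution of each sample
    return float(np.sum(weights * t)), weights             # expected termination depth

def depth_loss(rendered, measured):
    """Assumed L1 loss between rendered depths and measured LiDAR ranges."""
    return float(np.mean(np.abs(np.asarray(rendered) - np.asarray(measured))))

# Usage: a ray from the origin along +x hits the toy wall at about 5 m.
origin = np.zeros(3)
direction = np.array([1.0, 0.0, 0.0])
depth, w = render_depth(toy_density, origin, direction)
print(round(depth, 3))  # 5.0
```

Training would minimize such a loss with respect to the field's parameters. Under the same assumptions, sequential pose estimation would instead keep the field fixed and adjust the ray origins and directions (the LiDAR pose) to reduce the loss for a new scan. This is a hedged reading of the text, not the authors' stated procedure.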